Uniformly Faster Gradient Descent of Varying Step Sizes for Smooth Convex Functions

Program:

Applied Mathematics and Statistics

Project Description:

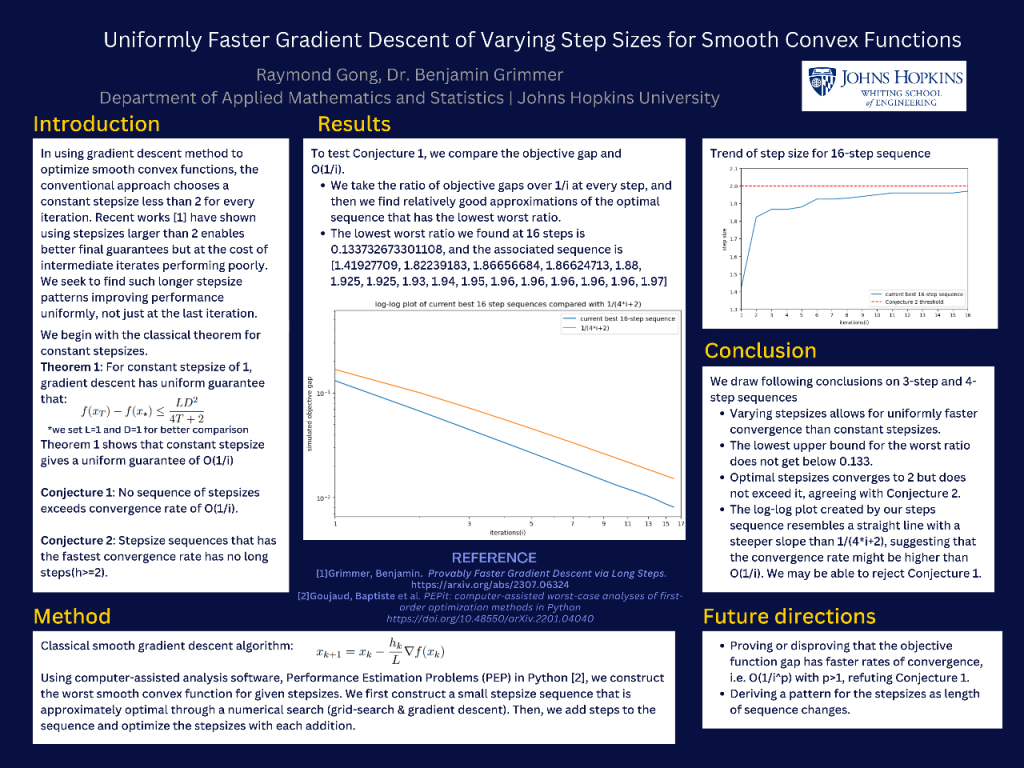

When gradient descent is used to minimize smooth convex functions, the conventional approach uses a constant stepsize less than 2 (measured relative to the inverse of the smoothness constant) at every iteration. Recent work [1] has shown that using stepsizes larger than 2 yields better final guarantees, but at the cost of intermediate iterates performing poorly. We seek long-stepsize patterns that improve performance uniformly, not just at the last iteration.
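As a rough illustration of the trade-off described above, the following sketch runs gradient descent with a constant stepsize and with a pattern containing one long step. Everything in it is an assumption made for illustration: the toy quadratic objective, the helper name `gradient_descent`, the convention that each stepsize `h` is divided by the smoothness constant `L`, and the pattern `[1.5, 3.0, 1.5]`, which is hypothetical and not taken from [1].

```python
def gradient_descent(grad, x0, stepsizes, L):
    """Run x_{k+1} = x_k - (h_k / L) * grad(x_k) and return every iterate."""
    x = list(x0)
    iterates = [list(x)]
    for h in stepsizes:
        g = grad(x)
        x = [xi - (h / L) * gi for xi, gi in zip(x, g)]
        iterates.append(list(x))
    return iterates


# Toy objective (assumed): f(x) = 0.5 * sum(lam_i * x_i^2), smooth and convex.
EIGS = [1.0, 0.1]
L = max(EIGS)  # smoothness constant of this quadratic


def f(x):
    return 0.5 * sum(lam * xi * xi for lam, xi in zip(EIGS, x))


def grad_f(x):
    return [lam * xi for lam, xi in zip(EIGS, x)]


x0 = [1.0, 1.0]
constant = [1.0, 1.0, 1.0]      # conventional choice: every stepsize below 2
long_pattern = [1.5, 3.0, 1.5]  # hypothetical pattern with one step above 2

vals_constant = [f(x) for x in gradient_descent(grad_f, x0, constant, L)]
vals_long = [f(x) for x in gradient_descent(grad_f, x0, long_pattern, L)]

print("constant stepsize:", vals_constant)
print("long-step pattern:", vals_long)
```

On this toy instance the objective rises after the long step and falls again once the pattern completes, which is the kind of poor intermediate behavior the project aims to avoid. The example says nothing about worst-case guarantees, which are what [1] and this project concern.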

Project Mentors, Sponsors, and Partners

- Benjamin Grimmer
